Case study · Side project

Splash

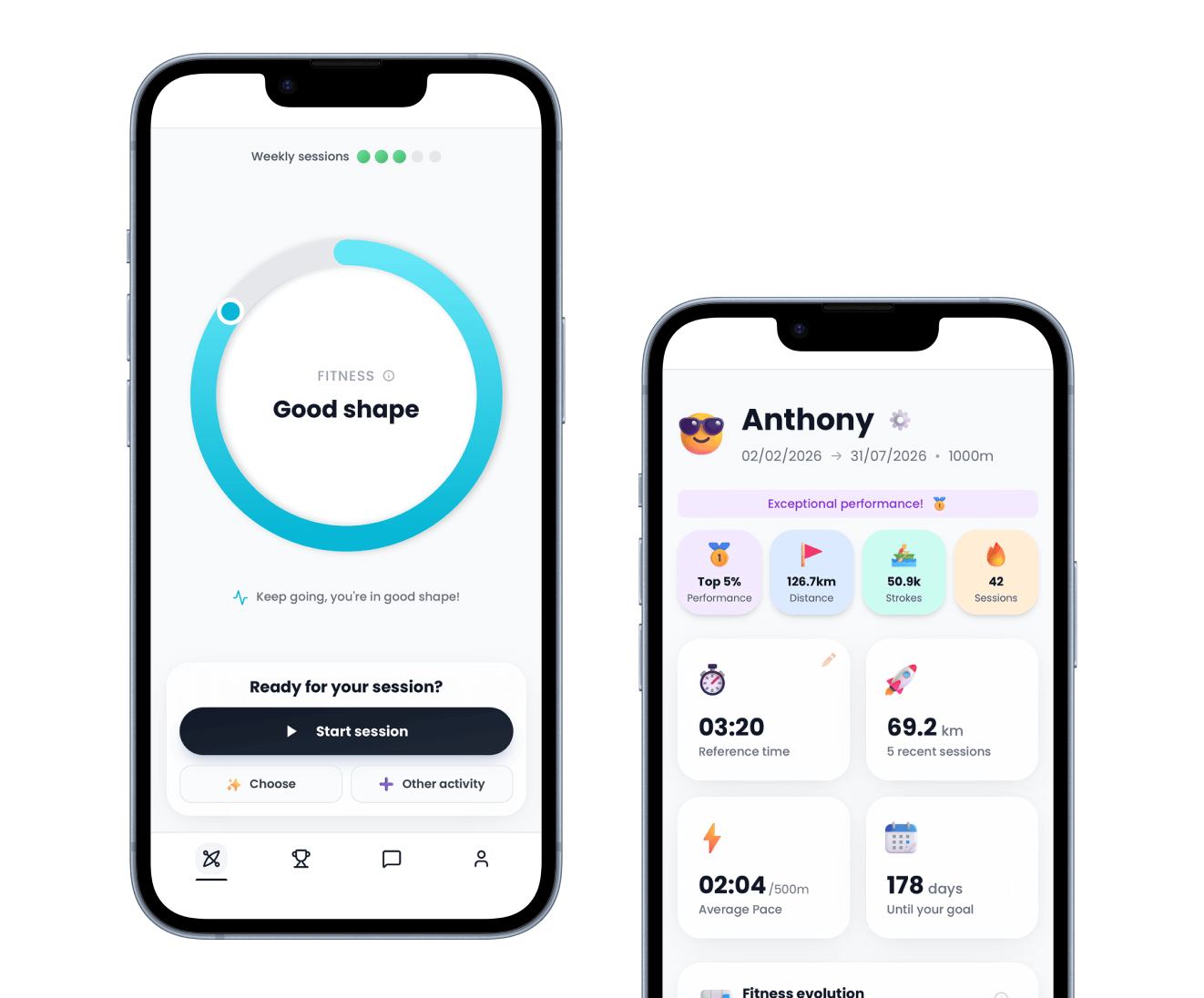

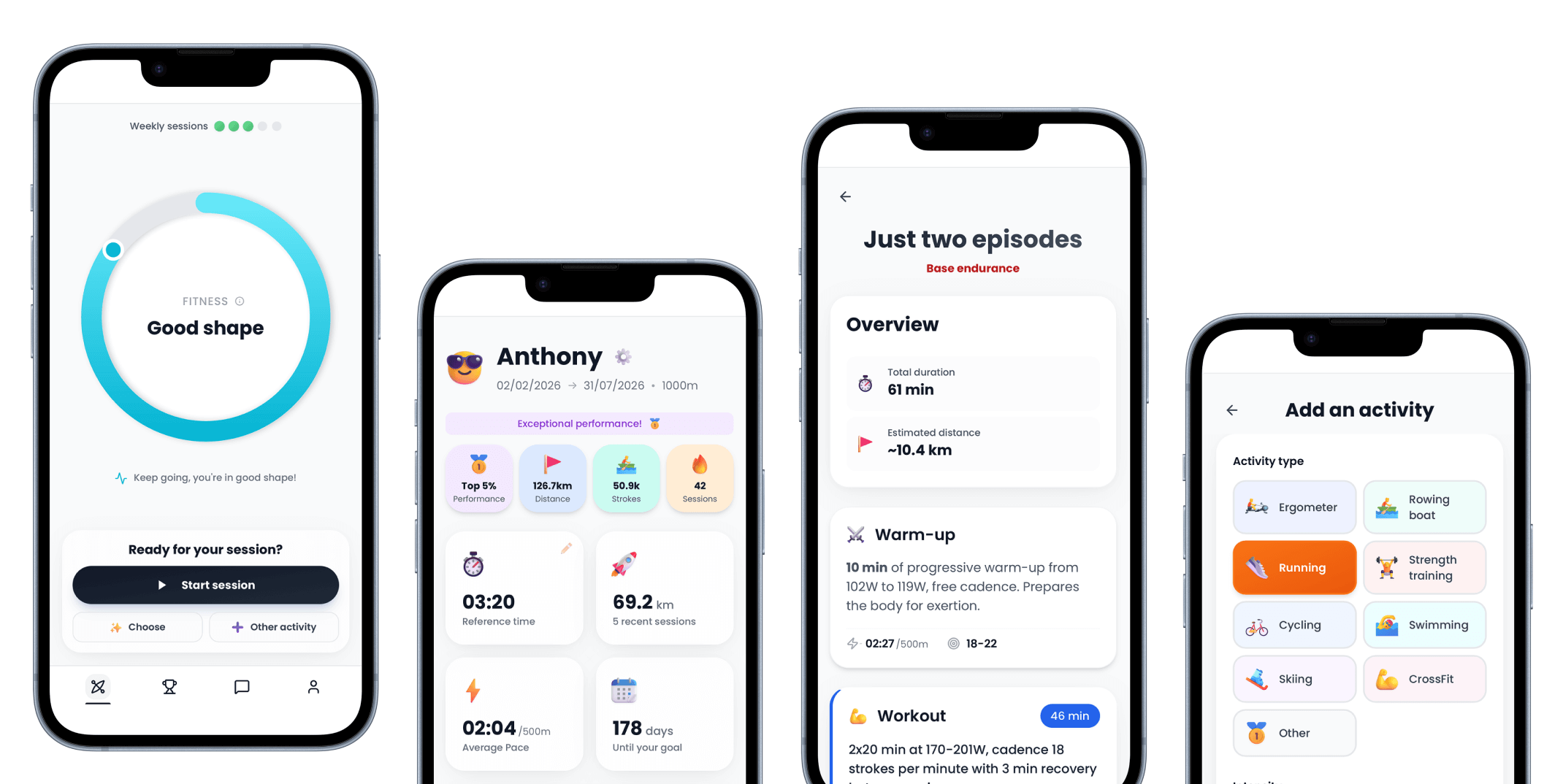

A rowing training app that builds personalised plans from elite coaching principles. I used this project to test how far AI-assisted development can go when guided by clear product thinking, going from zero to a working prototype in about twenty-five hours.

~25h

From idea to working prototype

End-to-end

Complete product experience

Product

A training app for rowers built around one simple loop: set a performance goal, follow a structured plan, and adapt sessions based on real fatigue. Workouts are designed from coaching programs defined with Maxime Ducret, a multiple French rowing champion and full-time coach.

Same experience across web, iOS, and Android.

My role

- Product strategy: focused on individual rowers

- UX design: structured the app around goals and fatigue tracking

- Implementation: built the full stack using Cursor, Supabase, and Vercel

- Domain integration: turned coaching principles into usable app features

Context

- Built evenings and weekends as a side project

- Collaboration with a rowing coach and rower friends for expertise

- Initial focus: French-speaking rowers

- Used as a testbed for AI-assisted product development

- Scoped to explore product and technical execution rather than growth

How Splash works

Set a goal, follow a structured plan, and let your actual fatigue guide what comes next.

Set your goal

Choose a distance (500m, 1k, 2k, or 6k), enter your current time, and pick a target date. Splash calculates a realistic improvement target based on training science.
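As a rough illustration of the goal step, here is a minimal sketch of how a target time could be derived from a current time and a target date. Every name and number in it (`Goal`, `WEEKLY_GAIN`, `MAX_TOTAL_GAIN`, `targetTimeSec`) is an assumption made for this example, not Splash's actual model. The only rowing convention it relies on is expressing pace as a split per 500 m.

```typescript
// Hypothetical sketch: names and rates are assumptions, not Splash's real logic.
type Distance = 500 | 1000 | 2000 | 6000;

interface Goal {
  distanceM: Distance;
  currentTimeSec: number;
  weeksUntilTarget: number;
}

// Assumed values, chosen only to make the example concrete.
const WEEKLY_GAIN = 0.003;   // 0.3% faster per week of training
const MAX_TOTAL_GAIN = 0.05; // cap the promised improvement at 5%

// Average pace per 500 m, the usual way rowers express speed.
function splitPer500(timeSec: number, distanceM: number): number {
  return timeSec / (distanceM / 500);
}

// Target time: linear weekly gain, capped so long horizons stay realistic.
function targetTimeSec(goal: Goal): number {
  const gain = Math.min(goal.weeksUntilTarget * WEEKLY_GAIN, MAX_TOTAL_GAIN);
  return goal.currentTimeSec * (1 - gain);
}

// Format seconds as m:ss.t, rounding to a tenth of a second.
function formatTime(totalSec: number): string {
  const tenths = Math.round(totalSec * 10);
  const min = Math.floor(tenths / 600);
  const sec = (tenths - min * 600) / 10;
  return `${min}:${sec < 10 ? "0" : ""}${sec.toFixed(1)}`;
}
```

The cap is the design choice that matters here: without it, a distant target date would produce an improvement no rower could deliver.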

Get your plan

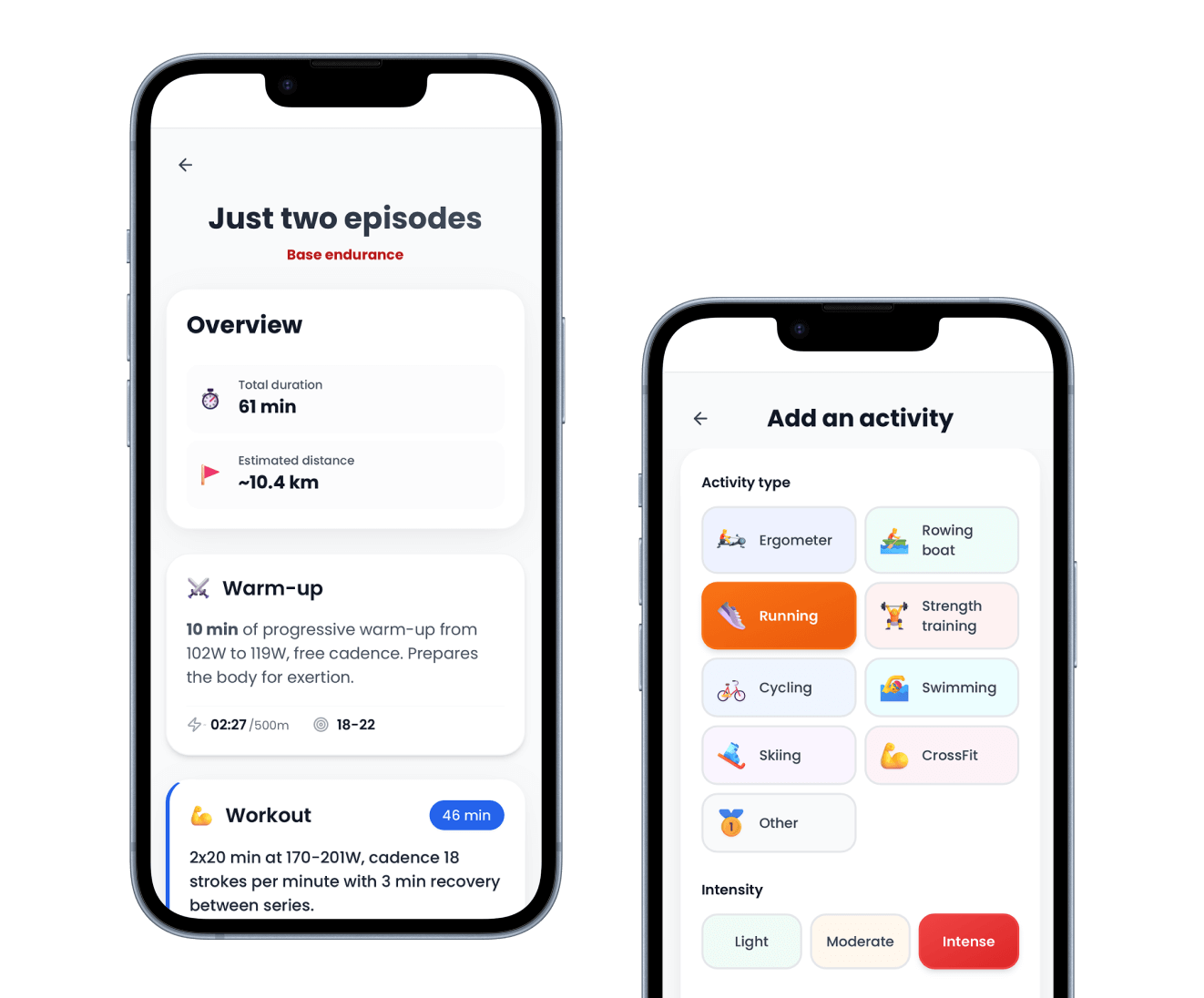

The app generates workouts using Maxime's coaching programs. Each session is adapted to your current level and goal.

Train and adapt

Log your sessions, rowing or other sports. The app tracks fatigue and recommends which type of workout to do next, adjusting the plan to fit how you're actually feeling.

Context

The opportunity

Runners and cyclists have dozens of training apps. Rowers mostly don't. Many rowers share generic workouts and lack week-to-week coaching guidance. They repeat the same sessions without knowing if they're on track for their goals.

Starting point

Conversations with rower friends revealed a clear need: a way to get personalised, rowing-specific plans that adapt to life's chaos. We asked "What should I train this week to hit my goal?" and built around that question.

Challenge

How do you deliver quality coaching guidance without pretending AI can replace a human coach?

Splash was an experiment with two goals. First: solve a niche problem for rowers that existing fitness apps ignore. Second: stress-test AI-assisted development on a real product, not a toy example.

Rowing-specific

Create something that feels native to the sport, not like a running app with an "indoor rowing" toggle.

Structured but flexible

Give self-coached rowers a coherent training structure without locking them into a rigid plan that ignores reality.

AI-assisted, human-led

Use AI to accelerate development, but keep all product decisions and architectural choices firmly in human hands.

Approach

What I did

Cut ruthlessly

Product strategy

I stripped the scope down to one core flow: set a goal, get a plan, follow sessions that adapt to fatigue. Just the athlete's training loop, nothing more.

Result: A focused scope that could actually be built end-to-end in days, not weeks.

Encode expert knowledge

Domain modelling

I worked with three rowers, including Maxime, a multiple French champion who now coaches full-time. We translated his training principles into structured workouts: intensity zones, progression patterns, and race preparation logic. I then integrated these into the app in a way that makes them accessible and useful for rowers.

Result: The app recommends workouts based on coaching expertise, not generic templates.
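To make "structured workouts" concrete, here is one way such a catalog could be modelled. This is a sketch under stated assumptions: the zone labels, phase names, and the three sample sessions are placeholders invented for this example and do not reproduce Maxime's programs.

```typescript
// Hypothetical data model; zones, phases, and sessions are placeholders.
type Zone = "easy" | "threshold" | "race-pace";
type Phase = "base" | "build" | "race-prep";

interface Interval {
  zone: Zone;
  meters: number;
  restSec: number;
  repeats: number;
}

interface Workout {
  name: string;
  phase: Phase;
  intervals: Interval[];
}

// Illustrative catalog: one session per training phase.
const catalog: Workout[] = [
  { name: "Steady 8k", phase: "base",
    intervals: [{ zone: "easy", meters: 8000, restSec: 0, repeats: 1 }] },
  { name: "4 x 1500", phase: "build",
    intervals: [{ zone: "threshold", meters: 1500, restSec: 180, repeats: 4 }] },
  { name: "8 x 500", phase: "race-prep",
    intervals: [{ zone: "race-pace", meters: 500, restSec: 120, repeats: 8 }] },
];

// Total rowing distance of a workout, ignoring rest.
function totalMeters(workout: Workout): number {
  return workout.intervals.reduce((sum, i) => sum + i.meters * i.repeats, 0);
}

// All catalog workouts that belong to a given training phase.
function workoutsForPhase(phase: Phase): Workout[] {
  return catalog.filter((w) => w.phase === phase);
}
```

Keeping workouts as plain data rather than hard-coded screens means a coach's changes only touch the catalog, not the app logic.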

Build around fatigue

Training experience

Rather than only tracking rowing volume, the app monitors fatigue across all activities: cycling, running, strength training. It uses this to adjust which rowing sessions to recommend next, mimicking how a coach would modify the week's plan when a rower arrives tired.

Result: Plans adapt to life, not just theory.
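The fatigue loop described above can be sketched roughly as follows. The scoring formula, the three-day half-life, the thresholds, and all type and function names are assumptions made for this example. Splash's actual fatigue model is not documented here.

```typescript
// Hypothetical sketch; formula and thresholds are illustrative assumptions.
type Sport = "rowing" | "cycling" | "running" | "strength";
type SessionKind = "recovery" | "endurance" | "intervals";

interface LoggedSession {
  sport: Sport;
  daysAgo: number;     // 0 = today
  durationMin: number;
  effort: number;      // perceived effort, 1 (easy) to 10 (maximal)
}

// Assumed decay: a session's contribution halves every 3 days.
const HALF_LIFE_DAYS = 3;

// Sum of duration x effort over all sports, discounted by age.
function fatigueScore(sessions: LoggedSession[]): number {
  return sessions.reduce((total, s) => {
    const load = s.durationMin * s.effort;
    const decay = Math.pow(0.5, s.daysAgo / HALF_LIFE_DAYS);
    return total + load * decay;
  }, 0);
}

// Map the score to the type of rowing session to suggest next.
function recommendNext(sessions: LoggedSession[]): SessionKind {
  const fatigue = fatigueScore(sessions);
  if (fatigue >= 600) return "recovery";  // assumed "tired" threshold
  if (fatigue >= 300) return "endurance"; // assumed "moderate" threshold
  return "intervals";
}
```

Because every sport feeds the same score, a hard bike ride yesterday pushes the next rowing session toward recovery, which is the behaviour a coach would apply by hand.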

Ship with AI assistance

Execution

I used Cursor as an AI pair programmer, with Supabase and Vercel as the stack. Instead of wireframing everything upfront, I iterated on the running app: prompting for components, then refining, simplifying, and enforcing my own standards.

Result: Twenty-five hours from idea to working prototype, complete with database, routing, and UI.

Impact

A complete prototype, not a mockup

Splash isn't slideware. Rowers can create goals, receive workouts, log sessions, and watch fatigue evolve. It's early-stage but complete enough to put in front of users for feedback.

Testable product, not concept art.

Expert logic in code

The prototype runs on training principles defined with coach collaborators. The system applies them consistently across different goals, athlete levels, and training rhythms.

Coaching expertise, packaged as software.

Proof point for AI-assisted dev

This project showed that AI coding tools excel at rapid prototyping, as long as someone owns scope, architecture, and code review. The goal wasn't "AI ships alone" but "how fast can we get to something testable?"

Product thinking becomes the bottleneck, not boilerplate.

Ready for validation

With the foundation in place (goals, plans, fatigue model, workout catalog), the next step is straightforward: test with rowers, collect feedback, and refine the experience.

Designed for iteration.

What I learned

AI redefines "first version"

With AI-assisted coding, a first version can be a functional app with database and navigation, not just wireframes. This makes it easier to test ideas in their actual form rather than relying on imagination.

Scope discipline matters more, not less

Faster building makes it easier to overbuild. Saying no to everything beyond the core training loop was essential. The focused version is what made this shippable in about twenty-five hours.

AI is a fast junior, not a senior engineer

Tools like Cursor are excellent at drafting code, but they don't replace code review, naming conventions, or architectural decisions. Steering them well is its own skill. Without guardrails, they happily add complexity that serves no purpose.

Speed favours feedback over speculation

Getting to a testable app in about twenty-five hours changes the equation. You can afford to learn from users much earlier instead of over-engineering on paper or trying to future-proof everything before shipping anything.